Introduction to Llama 3

- Llama 3 8B

- Llama 3 8B-Instruct

- Llama 3 70B

- Llama 3 70B-Instruct

Real-world Implementations of Llama 3

Llama 3 is more than a research idea: it is already used in real-world applications. Here are some key areas where it is making a difference.

- Content creation: Llama 3 can generate many kinds of text, such as poems, code, scripts, musical pieces, emails, and letters.

- Chatbots: Llama 3 can be integrated into chatbots to make them more intelligent and informative. This can be used for customer service chatbots, virtual assistants, and more.

- Software development: Llama 3 can generate code, translate languages, and draft other kinds of text, which can be helpful for software developers.

- Websites and mobile apps: Websites and apps can use Llama 3 to build generative AI chatbots, generate content, answer user questions, and personalize the user experience.

- Social media: Meta has integrated Llama 3 into its Meta AI assistant, which is available on Facebook, Instagram, and WhatsApp. This means that Llama 3 already powers AI chat on these platforms.

Why is Llama 3 better?

Here are some noteworthy features:

- Huge Dataset: Llama 3 was trained on a dataset comprising 15 trillion tokens, which is about seven times the size of the dataset used for Llama 2. This extensive training has significantly contributed to the models’ improved performance and capabilities.

- Context length: All variants of Llama 3 support a context length of 8K (8,192) tokens, twice the 4,096 tokens of Llama 2, allowing for longer interactions and more complex inputs. The context window covers both the user's input prompt and the model's response, so more tokens mean more content fits in a single exchange. A token roughly corresponds to a word or part of a word.

- Improved inference: Both the 8B and 70B versions of Llama 3 use a technique called grouped-query attention (GQA), in which groups of query heads share the same key and value heads. This reduces the memory needed at generation time and improves inference efficiency.

- Better Encoding: Llama 3 uses a tokenizer with a vocabulary of 128K tokens that encodes language much more efficiently, which leads to substantially improved model performance.

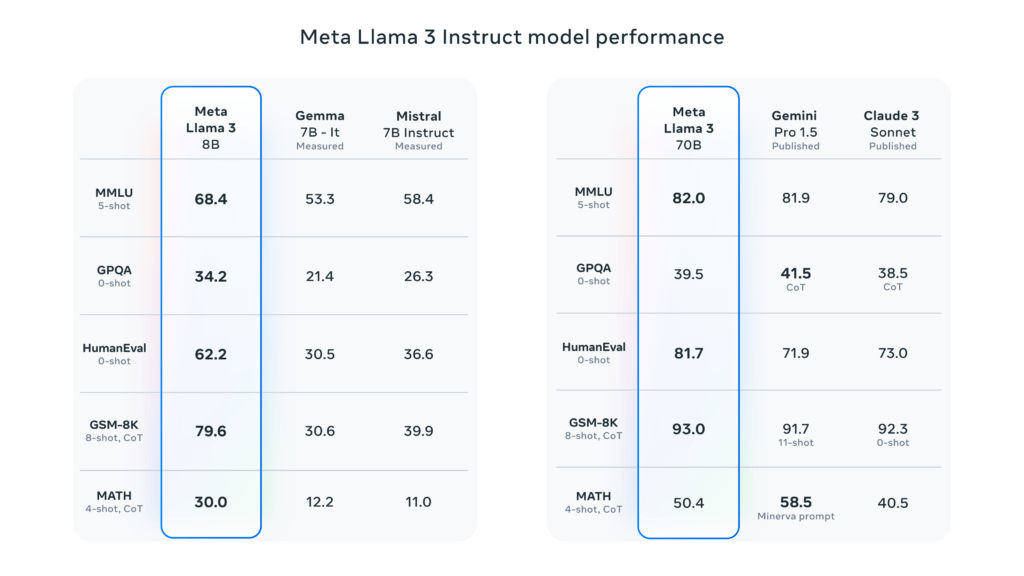

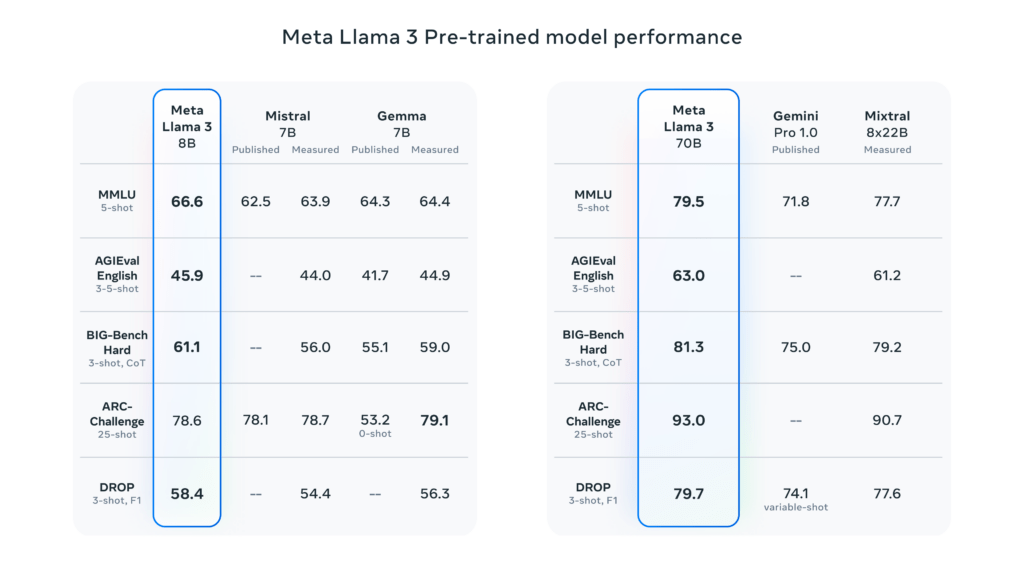

- Benchmark: Meta claims that in benchmark evaluations, Llama 3 8B surpassed other openly available models of similar size, such as Mistral 7B and Gemma 7B. Meta also reports that Llama 3 70B surpassed Gemini Pro 1.5, Mistral, and Claude 3 Sonnet on several benchmarks.
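To make the grouped-query attention and context length points above a little more concrete, here is a rough back-of-the-envelope sketch in Python. It estimates how much memory the key/value cache needs for a full 8K context, with and without shared key/value heads. The layer and head counts are assumptions chosen to resemble the 8B model; they are not stated in this article, so treat them as illustrative. The names `AttentionConfig`, `kv_head_for_query_head`, and `kv_cache_bytes` are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionConfig:
    n_layers: int        # number of transformer layers
    n_query_heads: int   # query heads per layer
    n_kv_heads: int      # key/value heads per layer (fewer than query heads under GQA)
    head_dim: int        # size of each head


def kv_head_for_query_head(cfg: AttentionConfig, query_head: int) -> int:
    """Return the index of the key/value head that a given query head shares."""
    group_size = cfg.n_query_heads // cfg.n_kv_heads
    return query_head // group_size


def kv_cache_bytes(cfg: AttentionConfig, n_tokens: int, bytes_per_value: int = 2) -> int:
    """Estimate the cache size: one K and one V vector per layer, per KV head, per token."""
    return 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * bytes_per_value * n_tokens


# Assumed, illustrative values (not taken from the article).
gqa = AttentionConfig(n_layers=32, n_query_heads=32, n_kv_heads=8, head_dim=128)
# Same model shape, but with one KV head per query head (classic multi-head attention).
mha = AttentionConfig(n_layers=32, n_query_heads=32, n_kv_heads=32, head_dim=128)

CONTEXT_TOKENS = 8192
GIB = 1024 ** 3

print(f"GQA cache: {kv_cache_bytes(gqa, CONTEXT_TOKENS) / GIB:.1f} GiB")  # GQA cache: 1.0 GiB
print(f"MHA cache: {kv_cache_bytes(mha, CONTEXT_TOKENS) / GIB:.1f} GiB")  # MHA cache: 4.0 GiB
print(kv_head_for_query_head(gqa, 5))  # 1 (query heads 4 to 7 share KV head 1)
```

Under these assumptions, sharing each key/value head among four query heads cuts the cache to a quarter of its size at 16-bit precision, which is one way to see why GQA helps at inference time.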

How can we use Llama 3?

Because Llama 3 is openly available, you can download the model and, as long as you have the technical chops, get it running on your own computer or even dig into its code.

Llama 3 models are integrated into the Hugging Face ecosystem, making them readily available to developers and researchers, who can download the models from the Hugging Face Hub. The integration includes tools such as the Transformers library and Inference Endpoints, which makes adoption and application development easier.
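As a minimal sketch of what this can look like in practice, the snippet below uses the Transformers `pipeline` API to chat with the instruct model. It assumes that the model ID is `meta-llama/Meta-Llama-3-8B-Instruct`, that you have accepted the model license on Hugging Face and logged in, that `torch`, `transformers`, and `accelerate` are installed, and that you are on a recent Transformers version that accepts chat-style messages. The helper names `build_messages` and `generate` are made up for this example.

```python
# Assumed Hub ID for the 8B instruct model; access is gated behind a license.
MODEL_ID = "meta-llama/Meta-Llama-3-8B-Instruct"


def build_messages(user_prompt, system_prompt="You are a helpful assistant."):
    """Build the chat-style message list that instruct models expect."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate(user_prompt, max_new_tokens=128):
    """Download the model (on first use) and return its reply to one prompt."""
    # Imported lazily so the helper above works without these heavy packages.
    import torch
    from transformers import pipeline

    generator = pipeline(
        "text-generation",
        model=MODEL_ID,
        model_kwargs={"torch_dtype": torch.bfloat16},  # 16-bit weights to save memory
        device_map="auto",  # place the model on a GPU if one is available
    )
    outputs = generator(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # With chat input, "generated_text" holds the whole conversation;
    # the last entry is the assistant's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("Write a haiku about llamas.")` downloads several gigabytes of weights the first time, so expect it to take a while and to need a capable GPU or a lot of RAM.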

Llama 3 is also available from model-as-a-service providers such as Perplexity Labs and Fireworks.ai, as well as cloud provider platforms such as Azure ML and Vertex AI.

You can also visit the Llama 3 repository to get the code and use it.